25.Mar.2024

Breakthrough Discoveries at the 2024 GTC AI Conference

The NVIDIA GTC 2024 event took place at the San Jose Convention Center from March 18 to 21. The conference drew more than 300,000 registrants, who attended either in person or virtually. The event included over 900 sessions, more than 300 exhibits, and more than 20 technical workshops covering topics such as automotive, generative AI, healthcare, NVIDIA Inception, robotics, and XR. NVIDIA founder and CEO Jensen Huang delivered the keynote at the SAP Center on March 18. This year marked a significant return to in-person events for the conference, which has grown from a gathering in a local hotel ballroom into one of the world's most important AI conferences.

Key Highlights of the 2024 GTC AI Conference:

1. Blackwell Platform and GPUs

2. Omniverse Cloud APIs and Digital Twin Integration

3. Project GR00T and Robotics

4. The Next Wave of Robotics AI: From Cloud to Edge

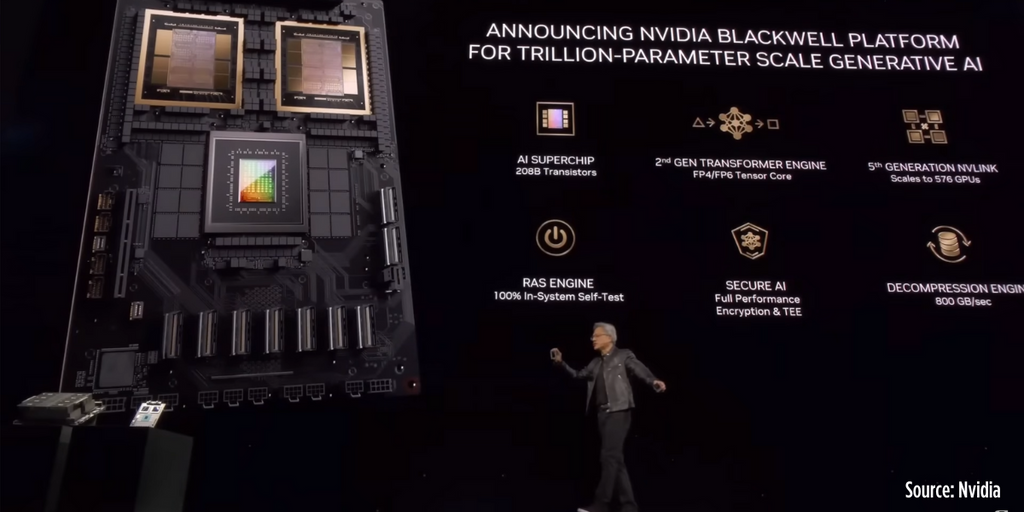

1. Blackwell Platform and GPUs

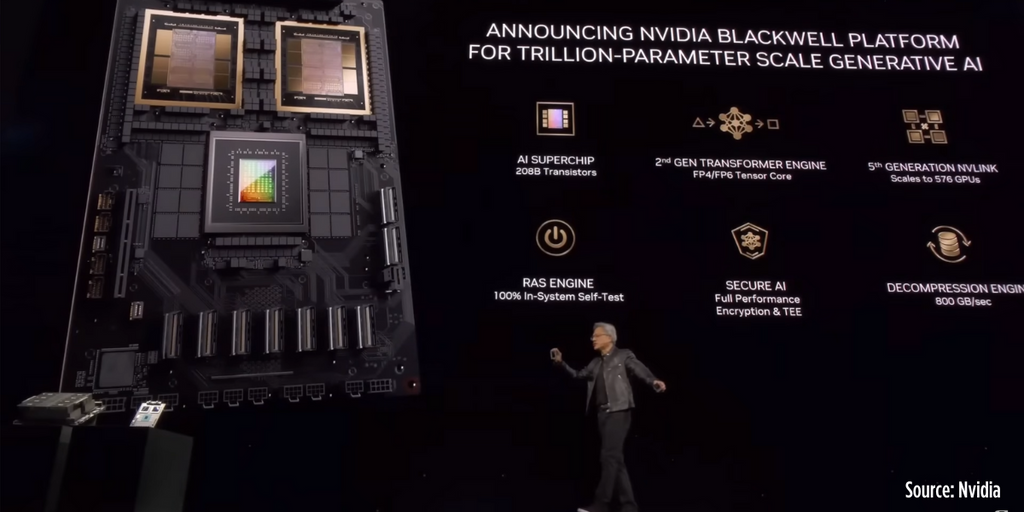

NVIDIA's Blackwell platform introduces the GB200 "superchip," establishing a new benchmark in AI technology. This powerful chip combines two B200 Tensor Core GPUs with the NVIDIA Grace CPU, drastically cutting energy and operational costs while significantly boosting computational speed and efficiency.

Key Features:

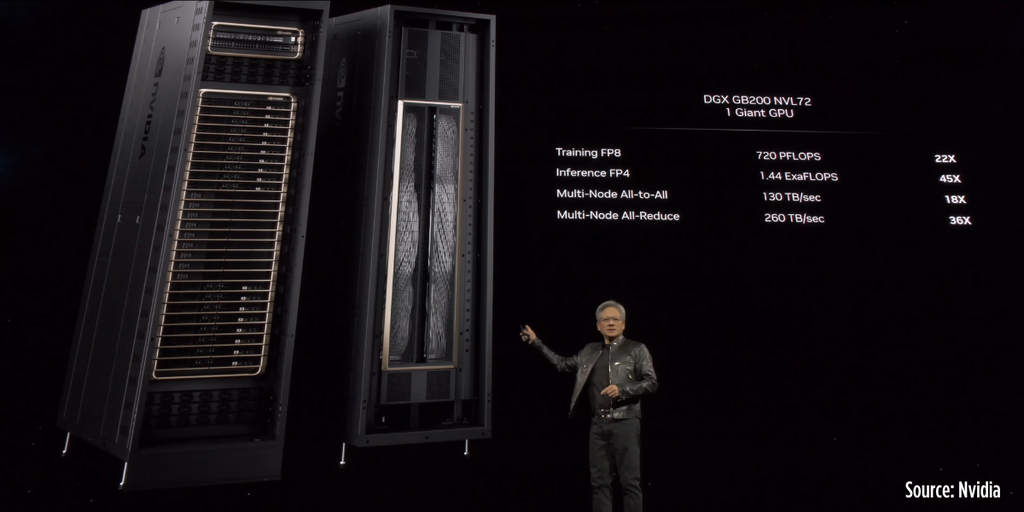

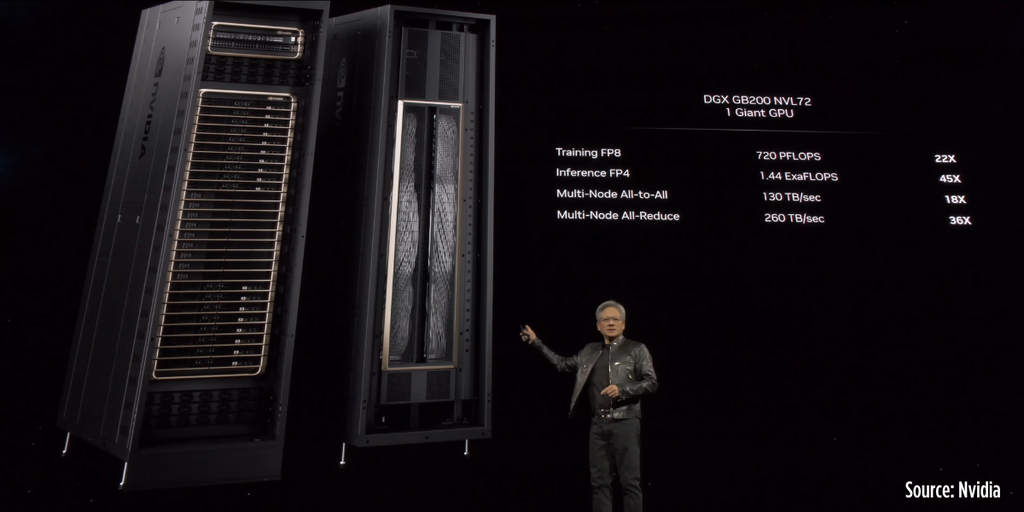

Boosting Generative AI with the Blackwell-Powered DGX SuperPOD

“The world’s first one exaFLOPS machine in one rack” – Jensen Huang

DGX SuperPOD is capable of housing 72 Blackwell GPUs and 36 Grace CPUs, it's capable of training models with up to 27 trillion parameters (30x performance increase compared to the NVIDIA H100 GPUs for LLMs.

2. Omniverse Cloud APIs and Digital Twin Integration

NVIDIA's Omniverse is transforming 3D collaboration and digital twins, diving into new markets with innovative APIs for easier CAD and CAE integration. Emphasized by NVIDIA’s CEO, Jensen Huang, digital twins are becoming integral to the manufacturing landscape, with the potential to digitize the heavy industries sector valued at $50 trillion. Omniverse stands out as the core platform for creating and managing realistic digital twins, powered by generative AI.

Key Highlights:

4. The Next Wave of Robotics AI is just around the corner

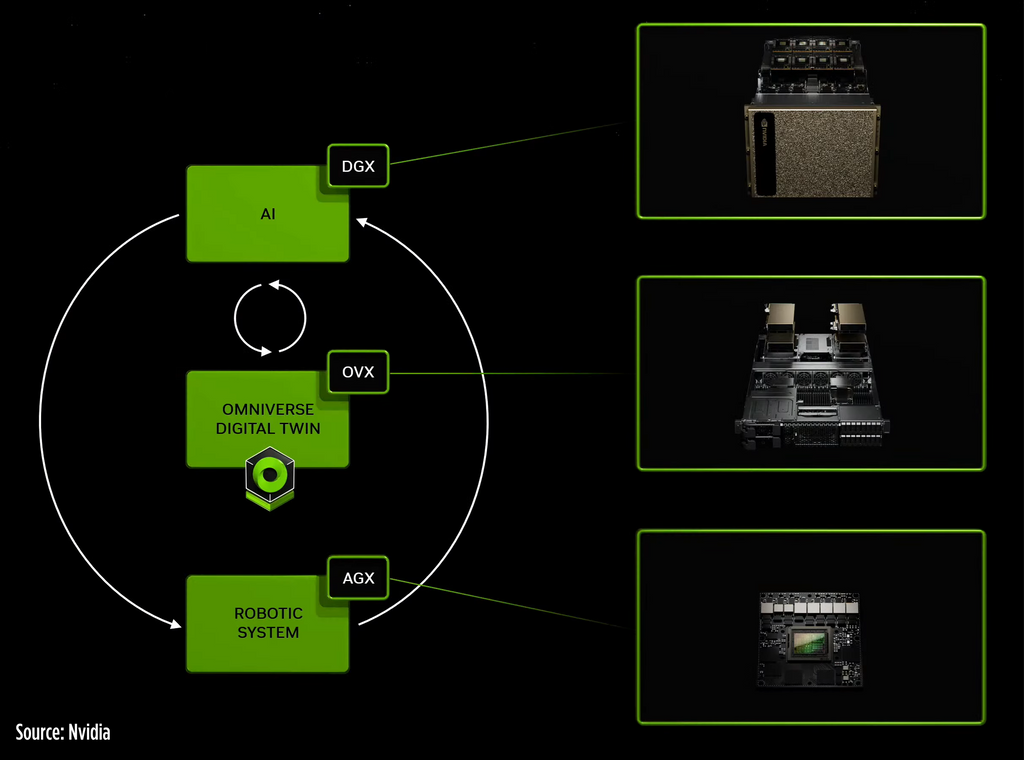

The dawn of advanced Robotics AI is upon us, requiring a structured approach across three critical computing platforms for seamless integration and functionality:

NVIDIA build this framework to propelled the AI deployments into the physical world. Training the AI model from the DGX data centers, conducting the simulation on the OVX cloud for real-life simulations, then deploying the AI into the edge through NVIDIA Jetson Orin SoCs that are dedicated for robotics and mobile vehicles. This framework ensures a harmonious blend of virtual development and tangible implementation, setting the stage for a new era in robotics AI.

Key Highlights of the 2024 GTC AI Conference:

1. Blackwell Platform and GPUs

2. Omniverse Cloud APIs and Digital Twin Integration

3. Project GR00T and Robotics

4. From cloud to the Edge AI architecture

1. Blackwell Platform and GPUs

NVIDIA's Blackwell platform introduces the GB200 "superchip," establishing a new benchmark in AI technology. This powerful chip combines two B200 Tensor Core GPUs with the NVIDIA Grace CPU, drastically cutting energy and operational costs while significantly boosting computational speed and efficiency.

Key Features:

- Efficiency: For LLM inference, NVIDIA cites up to 25 times lower cost and energy consumption than its predecessor, the Hopper-generation H100 GPU.

- Performance: Up to 4 times faster training and up to 30 times higher inference throughput than the H100 GPU.

- Scalability: Scales to up to 576 GPUs over fifth-generation NVLink, which improves GPU-to-GPU communication.

- Technological Advancements: Delivers system-level gains for generative AI, with up to 30x better performance and greater power efficiency.
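
To see how the GB200 superchip, the 72-GPU rack and the 576-GPU NVLink ceiling relate, here is a back-of-the-envelope sketch in Python. It assumes the counts quoted in this post (two B200 GPUs and one Grace CPU per GB200, 72 Blackwell GPUs and 36 Grace CPUs per GB200 NVL72 rack, and up to 576 GPUs over fifth-generation NVLink). The constant and function names are illustrative, and the "eight racks" result is simple division, not an official NVIDIA configuration.

```python
# Counts as quoted in this post; the rack and NVLink-domain results below are
# plain arithmetic on these counts, not official NVIDIA specifications.
GPUS_PER_GB200 = 2        # two B200 Tensor Core GPUs per GB200 superchip
CPUS_PER_GB200 = 1        # one Grace CPU per GB200 superchip
GPUS_PER_NVL72_RACK = 72  # GB200 NVL72 rack: 72 Blackwell GPUs
MAX_NVLINK_GPUS = 576     # upper bound quoted for fifth-generation NVLink


def rack_composition(gpus_per_rack: int) -> tuple[int, int]:
    """Return (superchips, grace_cpus) needed to supply gpus_per_rack GPUs."""
    superchips, remainder = divmod(gpus_per_rack, GPUS_PER_GB200)
    if remainder:
        raise ValueError("GPU count must be a multiple of GPUS_PER_GB200")
    return superchips, superchips * CPUS_PER_GB200


superchips, cpus = rack_composition(GPUS_PER_NVL72_RACK)
# How many 72-GPU racks fit in one maximum-size NVLink domain (illustrative).
racks_in_domain = MAX_NVLINK_GPUS // GPUS_PER_NVL72_RACK

print(f"{superchips} GB200 superchips -> {GPUS_PER_NVL72_RACK} GPUs, {cpus} Grace CPUs")
print(f"{MAX_NVLINK_GPUS}-GPU NVLink domain = {racks_in_domain} NVL72-sized racks")
```

Running it shows 36 superchips supplying the 72 GPUs and 36 Grace CPUs of one rack, and eight such racks filling a 576-GPU NVLink domain.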

| Feature | Hopper GPU | Blackwell GPU |
|---|---|---|
| GPU Memory | Up to 188 GB | Up to 384 GB |
| Memory Bandwidth | Up to 7.8 TB/s | 16 TB/s (aggregate) |
| FP16/BF16 Performance | 3.9 PFLOPS | 10 PFLOPS |
| FP64 Performance | 60 TFLOPS | 90 TFLOPS |
| Energy Efficiency | Lower than Blackwell | Higher than Hopper |
| AI Performance | Lower than Blackwell | 5x the AI performance of Hopper |
| No. of Transistors | 80 billion | 208 billion (2 x 104 billion) |
| Process Technology | TSMC 4N | TSMC 4NP |
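
The table is easier to read as generational ratios. The Python sketch below simply divides each Blackwell value by the corresponding Hopper value from the comparison table; the dictionary labels are our own, PFLOPS are converted to TFLOPS so the units match, and the transistor count uses 2 x 104 billion. Because some entries are aggregates (the aggregate bandwidth and the two-die transistor count), treat the results as rough comparisons, not per-chip specifications.

```python
# Values copied from the Hopper vs Blackwell comparison table.
# Units: memory in GB, bandwidth in TB/s, compute in TFLOPS, transistors in billions.
SPECS = {
    "GPU memory (GB)": (188, 384),
    "Memory bandwidth (TB/s)": (7.8, 16),
    "FP16/BF16 (TFLOPS)": (3_900, 10_000),  # 3.9 and 10 PFLOPS
    "FP64 (TFLOPS)": (60, 90),
    "Transistors (billions)": (80, 104 * 2),
}


def generational_ratios(specs: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return Blackwell / Hopper for each row, rounded to two decimals."""
    return {name: round(new / old, 2) for name, (old, new) in specs.items()}


for name, ratio in generational_ratios(SPECS).items():
    print(f"{name:<26} {ratio:>5.2f}x")
```

With these inputs the ratios come out to roughly 2.04x for memory, 2.05x for bandwidth, 2.56x for FP16/BF16, 1.5x for FP64 and 2.6x for transistor count.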

Boosting Generative AI with the Blackwell-Powered DGX SuperPOD

“The world’s first one exaFLOPS machine in one rack” – Jensen Huang

Each rack-scale GB200 NVL72 system in the Blackwell-powered DGX SuperPOD houses 72 Blackwell GPUs and 36 Grace CPUs. NVIDIA says it can train models with up to 27 trillion parameters and delivers up to a 30x performance increase over NVIDIA H100 GPUs for LLM inference.

| Feature | Hopper | Blackwell |
|---|---|---|
| Platform | Hopper-based system | Blackwell-powered DGX SuperPOD (GB200 NVL72) |
| Training Time for GPT-MoE-1.8T | 90 days | 90 days |
| Number of GPUs | 8,000 | 2,000 |
| Power Usage | 15 MW (around 25,000 amperes) | 4 MW (about 1/4 the power) |
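
The training comparison reduces to a little arithmetic. This Python sketch assumes a constant power draw for the full 90 days (a simplification, since the table gives only one power figure per system), and the `TrainingRun` class is a hypothetical helper for illustration, not an NVIDIA tool.

```python
from dataclasses import dataclass

HOURS_PER_DAY = 24


@dataclass(frozen=True)
class TrainingRun:
    """One GPT-MoE-1.8T training run, using the figures from the table."""
    platform: str
    gpus: int
    power_mw: float  # total system power draw in megawatts
    days: int

    def energy_mwh(self) -> float:
        # Assumes a constant power draw for the whole run (a simplification).
        return self.power_mw * self.days * HOURS_PER_DAY

    def kw_per_gpu(self) -> float:
        # System-level power divided evenly across GPUs (includes non-GPU load).
        return self.power_mw * 1_000 / self.gpus


hopper = TrainingRun("Hopper", gpus=8_000, power_mw=15, days=90)
blackwell = TrainingRun("Blackwell", gpus=2_000, power_mw=4, days=90)

for run in (hopper, blackwell):
    print(f"{run.platform}: {run.energy_mwh():,.0f} MWh, {run.kw_per_gpu():.3f} kW per GPU")
print(f"GPU count ratio: {hopper.gpus / blackwell.gpus:.2f}x")
print(f"Energy ratio:    {hopper.energy_mwh() / blackwell.energy_mwh():.2f}x")
```

With the table's figures, the sketch reports 32,400 MWh for the Hopper system versus 8,640 MWh for Blackwell, a 3.75x energy reduction that matches the table's roughly one-quarter power figure. Interestingly, each Blackwell GPU accounts for slightly more system power (2.0 kW versus 1.875 kW), but a quarter as many GPUs are needed.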

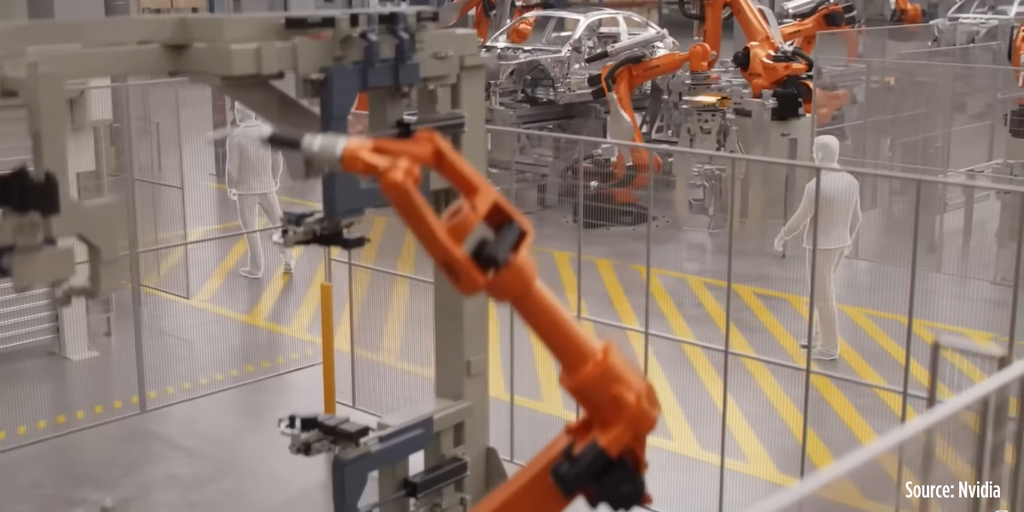

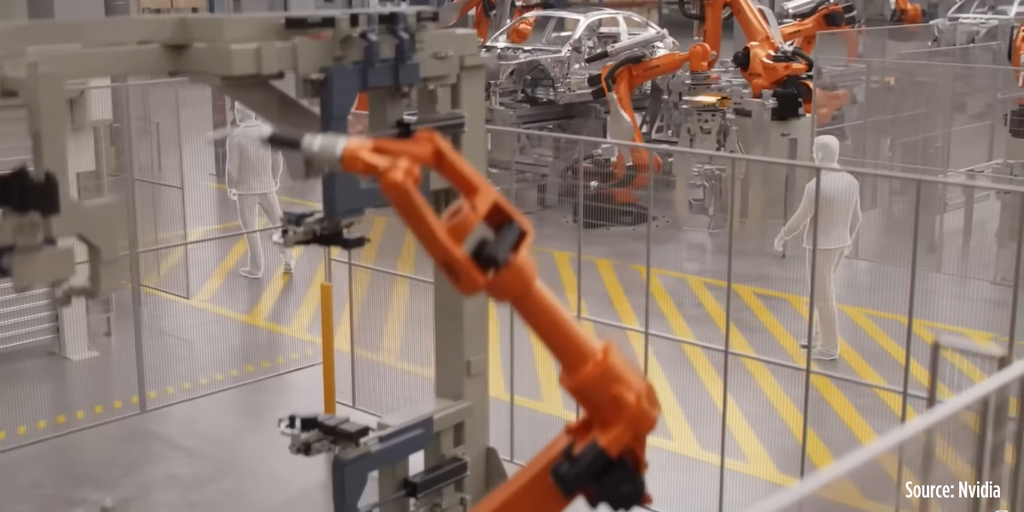

2. Omniverse Cloud APIs and Digital Twin Integration

NVIDIA's Omniverse is transforming 3D collaboration and digital twins, expanding into new markets with APIs that make CAD and CAE integration easier. As NVIDIA CEO Jensen Huang emphasized, digital twins are becoming integral to manufacturing, with the potential to digitize the $50 trillion heavy-industries sector. Omniverse stands out as the core platform for creating and managing realistic digital twins, powered by generative AI.

Key Highlights:

- API Expansion: New APIs make it easier to integrate existing tools, such as CAD and CAE software, with Omniverse.

- Manufacturing Vision: Predicts that digital twins will become universal in manufacturing.

- Omniverse's Role: Serves as the central platform for digital twin creation.

- Data Center Optimization: Demonstrates how a digital twin can help plan a data center upgrade to GB200 platforms.

- Visualization & Accuracy: Offers more accurate, higher-quality representation of CAD data.

- Efficiency in Design: Omniverse APIs enable faster construction and technology implementation.

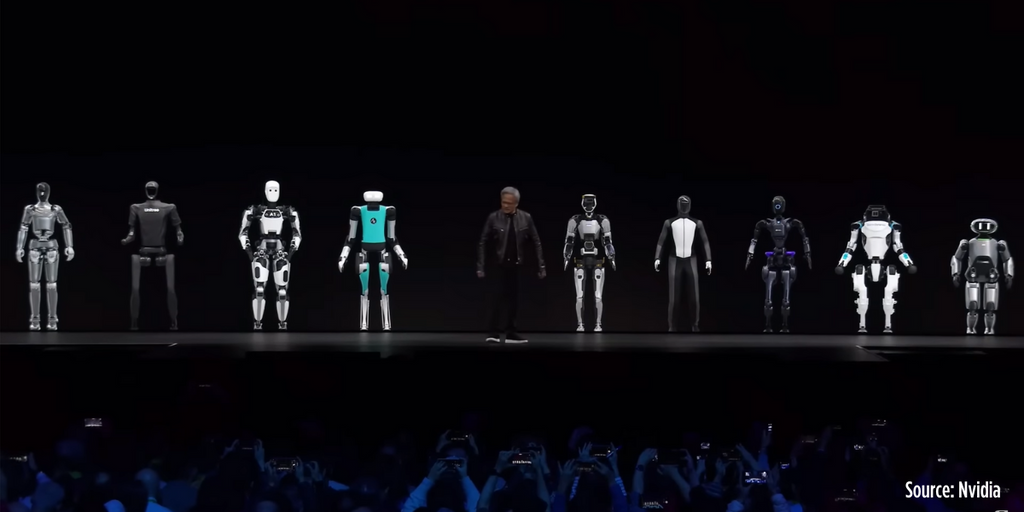

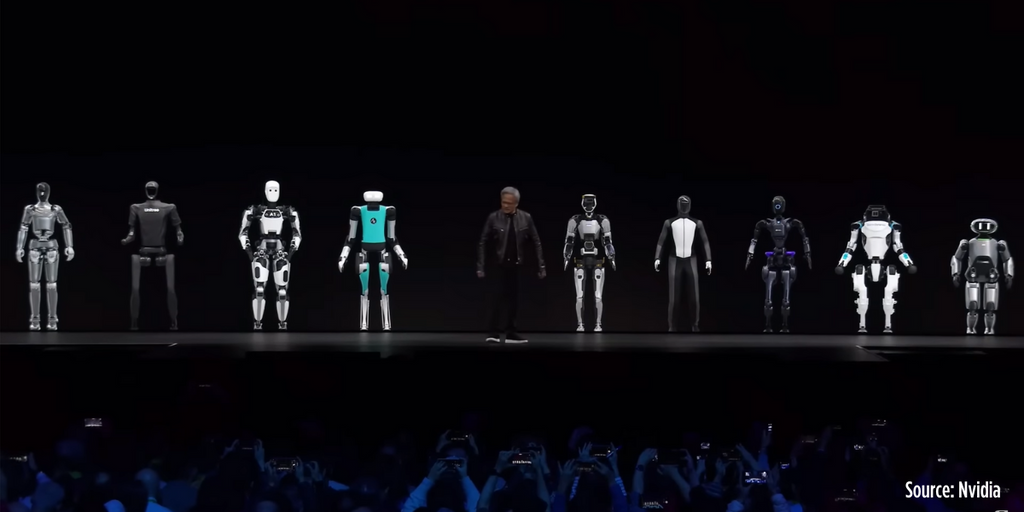

3. Project GR00T and Robotics

NVIDIA has launched Project GR00T, a foundation model aimed at revolutionizing humanoid robotics, along with substantial enhancements to its Isaac Robotics Platform. Announced at GTC, the updates include the new Jetson Thor robot computer, new training simulators, generative AI foundation models, and improved CUDA-accelerated perception and manipulation libraries.

Key Highlights:

- Project GR00T: A new foundation model for developing general-purpose humanoid robots.

- Jetson Thor: A new robot computer for complex tasks, built on NVIDIA's Thor SoC.

- Isaac Robotics Platform Updates: New AI models and tools for better robotics simulation and workflows.

- Partnerships and Impact: Collaboration with leading robotics companies to integrate these technologies and address global challenges through embodied AI.

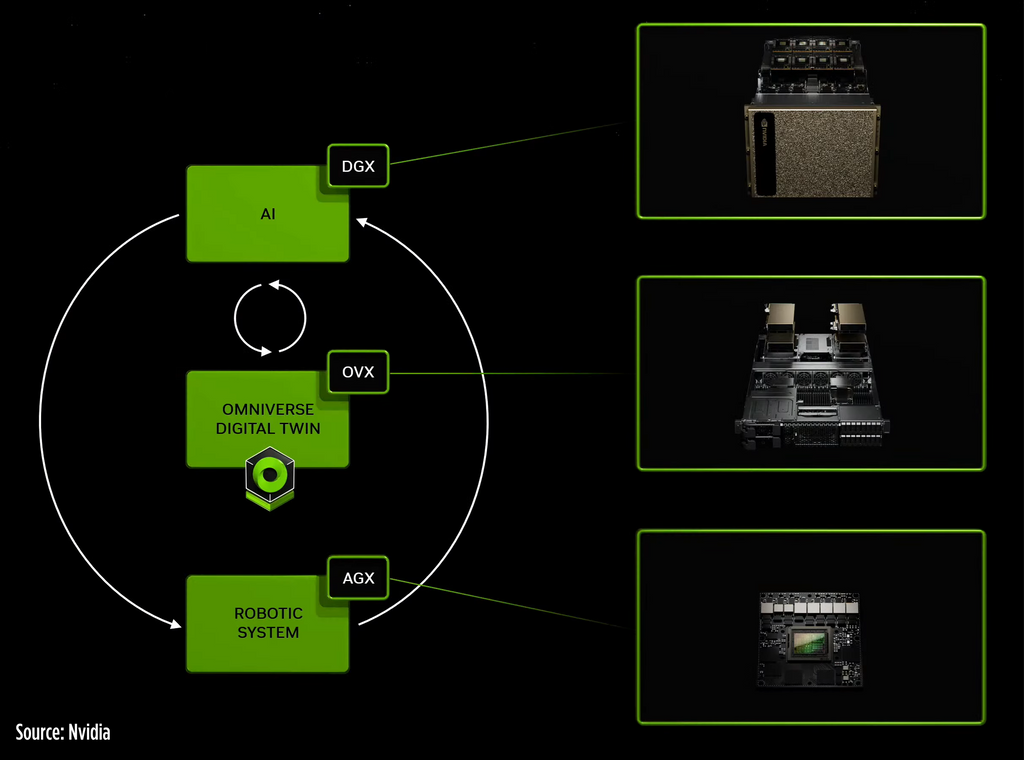

4. The Next Wave of Robotics AI Is Just Around the Corner

The dawn of advanced robotics AI is upon us, and it calls for a structured approach across three critical computing platforms that work together:

- AI Training with DGX Cloud: AI models are trained on NVIDIA DGX systems in DGX Cloud.

- Omniverse Simulation with OVX Cloud: Omniverse serves as the simulation engine, running on OVX systems hosted in Microsoft Azure, for detailed digital twin simulations.

- Edge AI Deployment: Jetson Orin SoCs are designed for low-power, high-efficiency AI and sensor processing, which makes them well suited to real-world deployments in robotics, automated guided vehicles (AGVs), and autonomous mobile robots (AMRs).

NVIDIA built this framework to carry AI deployments into the physical world: models are trained in DGX data centers, tested in realistic simulations on the OVX cloud, and then deployed at the edge on NVIDIA Jetson Orin SoCs built for robots and mobile vehicles. This framework blends virtual development with real-world implementation, setting the stage for a new era in robotics AI.
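
The three-stage flow can be sketched as a simple pipeline. Everything here is hypothetical: `RobotPolicy`, `train_on_dgx`, `simulate_on_ovx`, `deploy_to_jetson` and the 0.95 success threshold are illustrative names and values, no NVIDIA SDK is called, and gating deployment on a simulation score is our assumption about how the simulation stage feeds the edge stage.

```python
from dataclasses import dataclass, field


@dataclass
class RobotPolicy:
    """Hypothetical stand-in for a robot model moving through the pipeline."""
    name: str
    trained: bool = False
    sim_success_rate: float = 0.0
    history: list[str] = field(default_factory=list)


def train_on_dgx(policy: RobotPolicy) -> RobotPolicy:
    # Stage 1 (DGX / DGX Cloud): train the model. Placeholder only.
    policy.trained = True
    policy.history.append("train:DGX")
    return policy


def simulate_on_ovx(policy: RobotPolicy, success_rate: float) -> RobotPolicy:
    # Stage 2 (Omniverse on OVX): evaluate the policy in a digital twin.
    # success_rate is passed in here; a real run would measure it in simulation.
    if not policy.trained:
        raise RuntimeError("only trained policies can be simulated")
    policy.sim_success_rate = success_rate
    policy.history.append("simulate:OVX")
    return policy


def deploy_to_jetson(policy: RobotPolicy, threshold: float = 0.95) -> bool:
    # Stage 3 (Jetson Orin at the edge): ship only policies that passed simulation.
    if policy.sim_success_rate < threshold:
        policy.history.append("rejected")
        return False
    policy.history.append("deploy:Jetson Orin")
    return True


policy = simulate_on_ovx(train_on_dgx(RobotPolicy("amr-navigation")), success_rate=0.97)
deployed = deploy_to_jetson(policy)
print(deployed, policy.history)
```

Here the policy passes its simulated check, so its history records training, simulation and deployment in that order; a policy with a lower simulated success rate would be rejected before it ever reached the edge device.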