01.Jun.2020

GPU Inference Analysis: A Perspective at the Rugged Intelligent Edge

To alleviate these restrictions, edge computers are deployed closer to their varied inputs, where they can process data quickly and efficiently and preserve system responsiveness. This hardware intervention allows organizations with distributed systems in challenging settings to use AI for rich situational insight and performance enhancements that improve quality control, safety, security and efficiency. But to bring deep learning (DL) to rugged edge computers, deep neural networks (DNNs) first need to be trained through repeated exposure to varied inputs, yielding a compute cost-efficient DL model capable of independently learning and making accurate predictions from limited input. Applying the trained, refined network to new data on the deployed hardware is called machine learning inference.

How are Deep Learning Models Created?

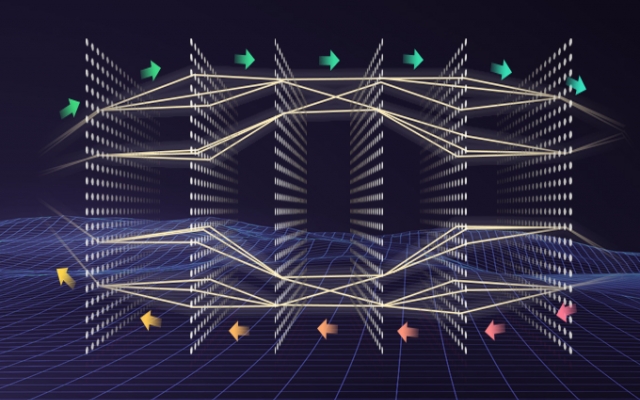

DL models are formed by feeding batches of specialized data into an artificial neural network, training it to classify and process particular aspects or properties of that data. DL models are trained to process inputs in ways similar to human learning processes. Inputs are transformed at each neural layer before passing to the next, where they are further transformed. The accumulation of transformations allows the neural network to learn many layers of non-linear features such as edges and shapes. Each connection between layers carries a weight, and each neuron combines the weighted sum of its inputs; tuning these weights improves the accuracy of the intended outputs. Feedback for incorrect outputs is routed back through the layers, adjusting the weighted connections to reduce the probability of repeated errors and further honing the DL model.

By training the neural network with adjustments to the connection weights, the DL model is streamlined to reduce the compute cost for the GPU. The trained DL model can then be deployed at a hardware level to act independently, trusted to exercise cognitive inference analysis based on its tuned reinforcement of experience. Optimizing for machine learning inference can further improve the DL models deployed at the edge, giving them greater accuracy and efficiency and reducing system workload cost. By pruning unneeded processing parameters and reducing network complexity, the optimization can be customized according to the resource availability of the edge nodes to maximize performance at each endpoint.
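The feedback loop and pruning step described above can be illustrated with a minimal sketch. The Python below trains a single artificial neuron by nudging its weights after every wrong answer, then applies magnitude-based pruning, which is one common pruning technique and not necessarily the one used in any particular deployment. The toy dataset, learning rate, epoch count, pruning threshold and function names (`predict`, `train`, `prune`) are all illustrative assumptions; a real DNN has many layers and would be trained with a DL framework on a GPU.

```python
import math


def sigmoid(z):
    """Squash a weighted sum into a score between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, features):
    """Forward pass: weighted sum of the inputs, then the activation."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(samples, labels, epochs=2000, lr=0.5):
    """Adjust the weights from the error on each sample (gradient descent)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            # Feedback: how far the output is from the intended output.
            error = predict(weights, bias, features) - label
            # Route the error back to each connection in proportion to its input.
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def prune(weights, threshold):
    """Magnitude pruning: zero out weights too small to matter much."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


# Hypothetical toy data: the label depends only on the first feature.
samples = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
labels = [0, 0, 1, 1]

weights, bias = train(samples, labels)
print("trained weights:", weights, "bias:", bias)
print("pruned weights: ", prune(weights, threshold=0.5))
```

Pruned weights can be skipped at inference time, which is what reduces the compute cost on a resource-constrained edge node. In practice the threshold would be chosen by checking that accuracy on held-out data stays above the required level.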

How Does Inference Analysis Inform the Intelligent Rugged Edge?

A DL model, once trained to meet the needed accuracy threshold, can be integrated in its most resource-efficient form on rugged edge computers to perform inference analysis of input data. Rugged edge computers deployed at the source of the data deliver robust processing capabilities in challenging, mobile, volatile or turbulent environments. Powered by rugged edge computer GPUs, the DL models can now leverage their training to produce accurate outputs with great efficiency and speed, requiring less input and fewer resources to achieve their goals. And by using rugged edge devices for machine learning inference analysis tasks instead of looping in cloud resources, the system avoids latency and cloud availability issues that can hamper critical goals. These goals can vary greatly for rugged edge deployments according to the flavor of DNN integrated.

A convolutional neural network delivers great speed and accuracy in recognizing shapes, features and textures in video input. This has wide implications for anything from machine vision quality inspections to biometric processing to barcode scanning. Long short-term memory networks are improved forms of recurrent neural networks. These have great processing agility that can be applied toward speech recognition, robot controls and forecasting. Long short-term memory networks can enable unsupervised learning that allows systems to generate new knowledge autonomously to appropriately process scenarios and inputs outside the realms of training. These models, as well as others, are capable of processing the same data inputs and working in conjunction to enable an intelligent rugged edge system.
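To make the convolutional network's feature recognition more concrete, here is a minimal sketch of the sliding-window operation at the core of a convolutional layer. The tiny image, the hand-written vertical-edge kernel and the function name `conv2d` are illustrative assumptions; in a trained network the kernel values are learned rather than hand-written, and the computation runs in parallel on the GPU. As in most DL frameworks, the kernel is applied without flipping (strictly a cross-correlation).

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 3x5 grayscale patch: dark on the left, bright on the right.
image = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]

# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

print(conv2d(image, kernel))  # [[0, 3, 3]]
```

The output is zero over the flat dark region and positive where the window overlaps the edge. Stacking many such filters across layers is what lets the network build up from edges to shapes and textures.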

Five Leading Applications for Rugged Edge Inference Analysis

1. Intelligent Surveillance

Inference analysis on rugged edge devices can instantly process and analyze data supplied by surveillance cameras and other connected peripherals. GPU-backed inference analysis drives real-time video analytics for captured visual data that can be applied to myriad safety, security and optimization tasks. A camera system deployed at a busy airport security touchpoint can use inference analysis to quickly perform facial recognition by cross-referencing a secure database of registered passenger images, providing faster, more accurate security and convenience than a manned gate can provide. Other parameters can be applied to the same video data to predict behavior, identify suspicious or unattended objects, and detect events that might require immediate security intervention. As the rugged edge computer processes more data, machine learning inference analysis tasks improve the DL model to perform recognition functions faster, from greater distances and in a variety of abnormal conditions.

2. Intelligent Transportation Systems (ITS)

Rugged edge computers deployed across distributed IoT networks in connected smart cities receive myriad types of data that demand immediate processing to maintain efficient public systems. GPU-powered inference tasks can perform license plate recognition to facilitate toll collection, quickly identify and locate vehicles of interest, and monitor traffic patterns and commute frequency. This data can be routed to other city systems for further processing to synchronize traffic signal systems, public transportation scheduling, vehicle-to-everything communications and emergency dispatches, enhancing the safety, efficiency and sustainability of smart city operations.

3. Industry 4.0

Inference analysis in the industrial IoT (IIoT) has enormous implications for the management of manufacturing and critical infrastructure systems. Rugged edge computers deployed across distributed systems each specialize in inference analysis tasks that lead to safer, more streamlined industrial operations. Machine vision systems analyze visual imagery of products to instantly detect flaws or aberrations imperceptible to the human eye, ensuring consistently high quality throughout the manufacturing process. Machine learning inference is a great tool for metrology and inspection applications that identify defects in a split second, as seen in the video example below.

4. Autonomous Vehicles and Fleet Management

Inference analysis enables machine learning for in-vehicle computers tasked with safely operating a motor vehicle autonomously or semi-autonomously. Robust parallel computations process video data from roadways, which contains information about road conditions, hazards and anticipated maneuvers from other drivers, to predict future outcomes and operate vehicle control systems safely and without crippling latency. Machine learning constantly refines system operability and responsiveness, preparing it to negotiate situations outside its training. Incoming data from ITS regarding weather, travel and traffic conditions can be processed in conjunction with GPS to optimize fuel consumption. Additionally, speech recognition parameters allow voice-activated controls for vehicle systems.

5. Medical Imaging and Disease Diagnosis

Inference analysis supports medical AI applications with the ability to employ compute-intensive imaging and prediction models in mobile or remote settings. GPUs accelerate the generation of medical reports as well as segmentation and prediction models based on radiology and computed tomography scans by rapidly processing high-resolution, multi-dimensional images. Without the need to reduce image quality for speed, physicians are able to diagnose patients more quickly and with far greater accuracy outside the medical facility, leading to a better outcome for patients.

GPU-Powered Computers from C&T's Inc. Deliver Inference Analysis at the Rugged Edge

C&T's industrial GPU computers bring intensive parallel compute power to virtually any rugged edge deployment. The NVIDIA GeForce® GTX 1050 Ti graphics engine, built on the NVIDIA Pascal™ architecture, enables responsive inference analysis close to the data origin to reduce network complexity and avoid latency that can impact time-critical applications. The industrial GPU computers support GPUs as powerful as the RTX 2060 Super, which provides triple the computation parallelism for inference analyses of far greater intensity.

The RCO-6020-1050TI GPU computer and VCO series of machine vision computers are purpose-built for high-performance computing in challenging deployments. The industrial GPU computers are designed with 6th/7th Gen Intel processors for the best IIoT hardware performance. With improved processing and graphics performance, Intel CPUs are optimized for machine and computer vision by offloading graphics processing from primary CPU cores. Fanless, cableless architecture eliminates failure points that reduce computer lifecycles in rugged edge deployments. The devices are rated for endurance in high-shock, high-vibration and turbulent environments like those in mobile and industrial settings. They offer a wide operating temperature range for exposure to heat and cold extremes, and wide voltage input ensures power adaptability in locations with uncertain or shifting voltages. Robust wireless connectivity over Wi-Fi and 4G/LTE ensures availability of cloud resources for backups, data transmissions and operational redundancy in the most rugged edge deployments. TPM 2.0 hardware security and edge autonomy safeguard sensitive data and signals, allowing them to be processed without rerouting to potentially compromised cloud resources.

As advancements in connectivity expand the IoT, organizations seeking to leverage the large influx of rich data toward safety, security, actionable insights and process optimization will further explore the rugged edges of their networks. C&T's hardened rugged edge computers and industrial GPU computers preserve mission immediacy with extended MTBF, strong processing and real-time responsiveness.