11.Jul.2023

AI-Based Video Analytics: What is it? How does it work?

AI-Based Video Analytics is widely used across industries such as security and surveillance, food and beverage, retail, transportation, and manufacturing and logistics, to name just a few. According to a report by MarketsandMarkets Analysis (2022), the market is expected to grow at a compound annual growth rate (CAGR) of 20.9% through 2026. With the spread of Edge AI technology, AI-Based Video Analytics will only become more powerful in the years to come.

In this blog, you will find out:

- What AI-Based Video Analytics is;

- How it works;

- Some of its applications and purposes;

- What hardware AI-Based Video Analytics requires.

What is AI-Based Video Analytics?

AI-Based Video Analytics, also known as Video Content Analysis (VCA), Video AI or Intelligent Video, refers to the process of deriving actionable insights and conclusions from data gathered in the form of digital video. AI-Based Video Analytics eases the burden of the repetitive, tedious task of humans watching video for hours on end. AI can not only observe the footage but can also be trained on large volumes of video to detect, identify, categorize, and automatically tag specific objects.

Overall, it is a tool that helps humans understand video content and makes automated decisions based on observations drawn from the collected data.
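The detect-then-tag loop can be sketched in a few lines. In this minimal sketch, the detector is a hypothetical stub (`fake_detector`) that stands in for a trained model. Each "frame" is simplified to a list of (label, score) pairs, and the 0.5 confidence threshold is an assumption chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Detection:
    label: str          # object class, e.g. "person"
    confidence: float   # model score in [0, 1]


# Hypothetical detector signature: takes one frame, returns its detections.
Detector = Callable[[object], List[Detection]]


def tag_video(frames: Iterable[object], detector: Detector, min_conf: float = 0.5) -> Counter:
    """Run the detector on every frame and count confident tags across the video."""
    tags: Counter = Counter()
    for frame in frames:
        for det in detector(frame):
            if det.confidence >= min_conf:  # discard low-confidence guesses
                tags[det.label] += 1
    return tags


def fake_detector(frame):
    # Stand-in for a trained model: here a "frame" is just a list of (label, score) pairs.
    return [Detection(label, score) for label, score in frame]


frames = [[("person", 0.9)], [("person", 0.8), ("forklift", 0.4)], [("forklift", 0.7)]]
print(tag_video(frames, fake_detector))  # Counter({'person': 2, 'forklift': 1})
```

The per-label counts are the kind of "actionable insight" a downstream rule could act on. For example, a rule could raise an alert when a person appears in a restricted area.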

Key Components of AI-Based Video Surveillance Systems

To fully understand AI-Based Video Analytics, let's take a quick look under the hood to see what makes it possible. The process draws on two related branches of AI: Machine Learning and Deep Learning. But first, let's briefly define AI.

AI, Machine Learning, and Deep Learning

Artificial Intelligence

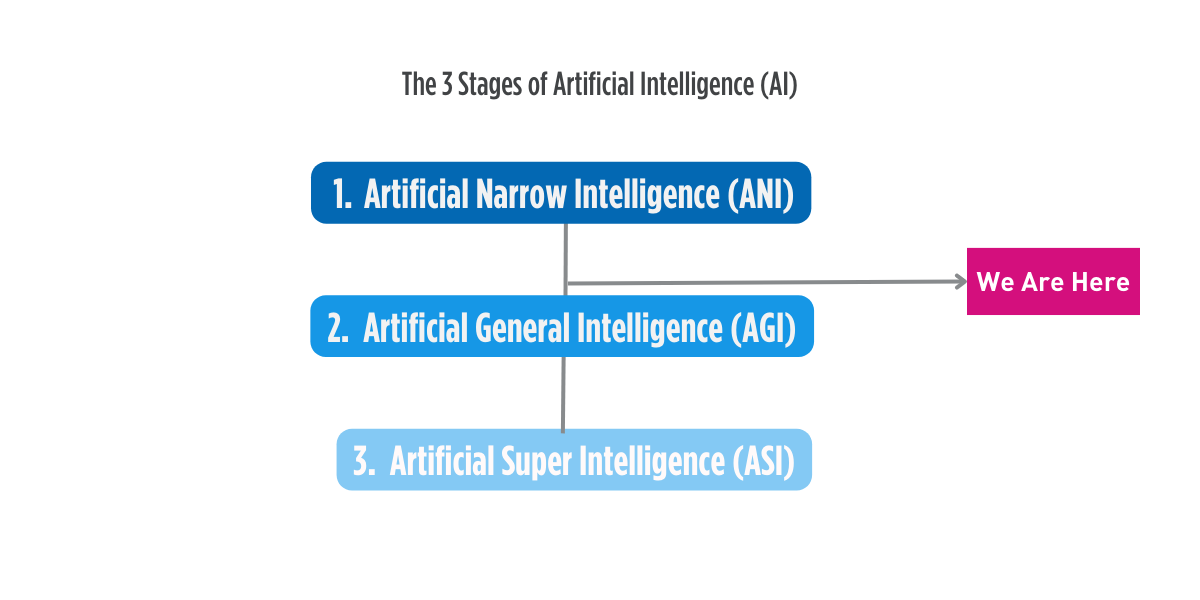

AI stands for Artificial Intelligence, a field that combines computer science and data to enable problem solving in machines (Coursera, 2023). John McCarthy, one of the founding fathers of artificial intelligence, coined the term in 1955 and defined AI as “the science and engineering of making intelligent machines.” AI is a vast topic that can be overwhelming at first glance, so for now we will start with its three stages.

AI development is commonly divided into three stages:

The first is Artificial Narrow Intelligence (ANI), where a computer can execute a narrow set of defined tasks, and those tasks only. Most of the AI-powered tools available today fall under this category, for example speech or facial recognition.

Next comes Artificial General Intelligence (AGI). At this stage, AI is assumed to be able to make decisions and think independently, just as humans do. AGI is more advanced than ANI and would be comparable to human intelligence. Some believe this stage is fast approaching, given rapid advances in chatbots such as ChatGPT that are capable of contextual reasoning and problem solving. One proposed way to determine whether machines have reached the same level of intelligence as humans is the Turing Test, introduced by Alan Turing (1950). Scary? Exciting? We’ll let you decide.

Finally, there is Artificial Super Intelligence (ASI), in which AI surpasses human intelligence by orders of magnitude. Some predict that this would mark the technological singularity, or “The Singularity”: the point at which AI growth becomes uncontrollable and irreversible, with unforeseeable consequences for human civilization. We can probably all agree on how frightening that thought is.

Machine Learning and Deep Learning

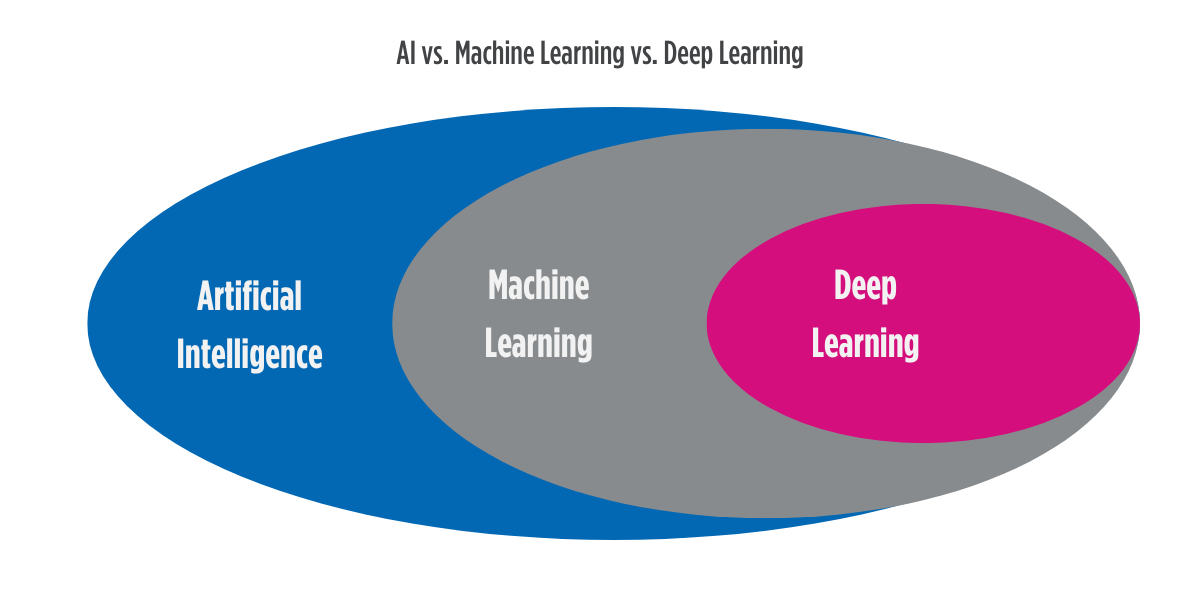

AI has many subsets, and subsets within those subsets. The two that AI-Based Video Analytics relies on are Machine Learning and Deep Learning, which are related fields within the broader domain of AI.

Ever wondered how the “Shows picked for you” tab is curated on Netflix?

Perhaps you noticed that, after you finished a movie, the titles on your feed featured similar actors and belonged to the same genres. Or perhaps YouTube’s autoplay put together a playlist of your favorite go-to music. These are everyday examples of Machine Learning in action.

Machine Learning is a subset of artificial intelligence in which algorithms analyze data, learn from the information collected, and then apply that knowledge to future decisions with little or no human intervention.

Although Machine Learning can become an expert at specific functions, it typically relies on humans to guide it. In supervised learning, people provide examples labeled with the desired output, and they intervene when the model returns inaccurate or undesired results.

So, instead of a human coding every decision a computer can make, the computer is “trained”: it collects, processes, and learns from data, and then makes decisions on its own.
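To illustrate training on human-labeled examples, here is a minimal nearest-centroid classifier, one of the simplest supervised learning methods. It averages the examples for each label, then assigns new inputs to the closest average. The features (pixel width and height of a detected blob) and the labels are invented for illustration. Real video systems use far richer models.

```python
from math import dist
from typing import Dict, List, Sequence, Tuple


def train(examples: List[Tuple[Sequence[float], str]]) -> Dict[str, List[float]]:
    """Learn one centroid (average feature vector) per human-provided label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(model: Dict[str, List[float]], features: Sequence[float]) -> str:
    """Assign the label whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda label: dist(model[label], features))


# Hypothetical labeled data: (width, height) of a detected blob in pixels -> object type.
labeled = [((40, 120), "person"), ((45, 110), "person"),
           ((200, 90), "car"), ((220, 100), "car")]
model = train(labeled)
print(predict(model, (50, 115)))  # person
print(predict(model, (210, 95)))  # car
```

Nobody writes an "if width > X then car" rule here. The decision boundary comes entirely from the labeled examples.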

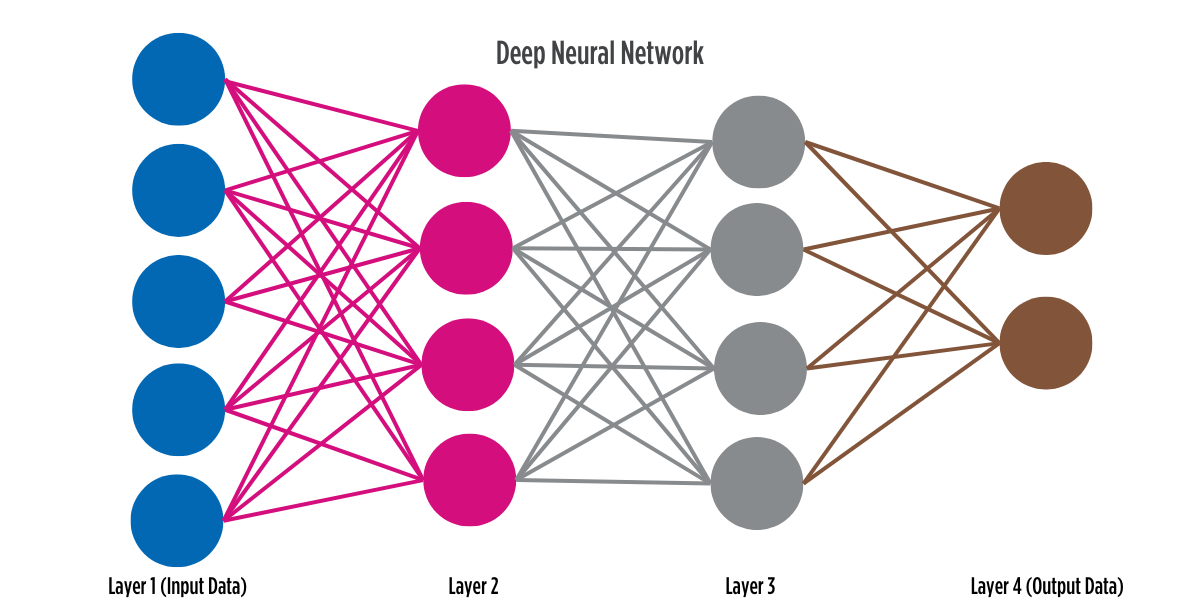

Deep Learning, on the other hand, is a subset of Machine Learning that uses artificial “neural networks” to mimic the learning processes of the human brain. Like the brain, these networks process information in a non-linear fashion.

These artificial neural networks are organized into “layers” of computing units called artificial neurons, which are the backbone of Deep Learning algorithms. One way to distinguish Deep Learning from other Machine Learning is to count the layers of processing a network contains. If it has more than three layers (including the input and output layers), it is considered Deep Learning.
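A tiny forward pass shows what “layers” of artificial neurons mean in practice. Each neuron computes a weighted sum of its inputs plus a bias, then applies a non-linear activation (ReLU in this sketch). The weights below are arbitrary placeholders, not trained values; in real Deep Learning they are learned from data.

```python
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights: one row per neuron, biases)


def relu(x: float) -> float:
    """Non-linear activation: passes positive values, zeroes out negatives."""
    return max(0.0, x)


def dense_layer(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One fully connected layer: each row of `weights` defines one neuron.
    ReLU is applied in every layer here, including the last, for simplicity."""
    return [relu(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs: List[float], layers: Sequence[Layer]) -> List[float]:
    """Feed the input through each layer in turn; each output becomes the next layer's input."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Placeholder weights: 2 inputs -> 3 hidden neurons -> 2 hidden neurons -> 1 output.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, 0.0]),
    ([[0.6, -0.1, 0.2], [-0.4, 0.3, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
print(forward([1.0, 2.0], layers))
```

Counting the input layer, this toy network has four layers. By the rule of thumb above, it would count as (very small-scale) Deep Learning.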

Whereas Machine Learning becomes an expert at a specified function by learning from pre-classified data, Deep Learning can learn from much larger, more diverse, and unstructured datasets in their raw form, including photos, text, and numbers. Given large, continuous volumes of input, deep learning algorithms learn by identifying patterns in the data they collect.

Deep Learning does not depend on manual feature extraction the way classical Machine Learning does, where experts must carefully select the relevant features. Instead, deep learning automatically learns and extracts features directly from the raw data, which eliminates the need for manual feature engineering. Deep neural networks are designed to learn hierarchical representations of the data, automatically capturing useful patterns and features at different levels of abstraction.
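For contrast, here is the kind of manual feature engineering that classical Machine Learning relies on and Deep Learning largely automates. An expert decides in advance which measurements describe an image. The two features here (average brightness and a crude horizontal edge score) are illustrative choices, not a standard recipe, and the “image” is a tiny grayscale grid.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0 (black) to 255 (white)


def mean_brightness(img: Image) -> float:
    """Hand-picked feature 1: average pixel intensity."""
    pixels = [p for row in img for p in row]
    return sum(pixels) / len(pixels)


def horizontal_edge_score(img: Image) -> float:
    """Hand-picked feature 2: average absolute difference between horizontally adjacent pixels."""
    diffs = [abs(row[i + 1] - row[i]) for row in img for i in range(len(row) - 1)]
    return sum(diffs) / len(diffs)


def extract_features(img: Image) -> List[float]:
    """The expert-designed feature vector a classical model would be trained on."""
    return [mean_brightness(img), horizontal_edge_score(img)]


img = [[0, 0, 255, 255],
       [0, 0, 255, 255]]
print(extract_features(img))  # [127.5, 85.0]
```

A deep network would instead take the raw pixel grid as input and learn its own features during training.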

Think of Deep Learning as a more advanced form of Machine Learning that can handle more complex datasets and produce results with less human intervention. By reducing the need for engineers to explicitly code features and categorize datasets, it makes data processing faster and more efficient.


How Does AI-Based Video Analytics Work?

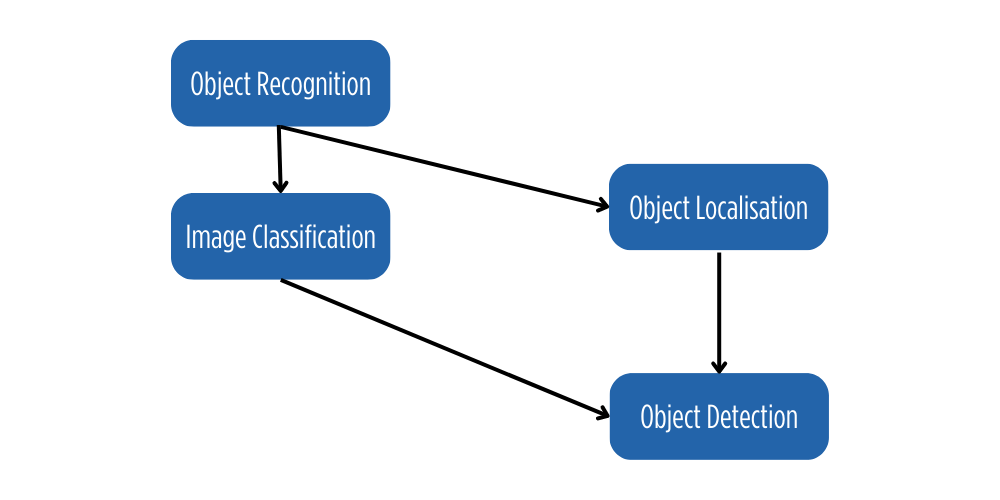

Now that we have covered the basics, let's move on to the “how”. The core technology behind AI-Based Video Analytics is called “Object Recognition”. Object Recognition uses Deep Learning and falls within the field of Machine Vision, a branch of computer science that studies how computers can “see”.

Object Recognition

According to Jason Brownlee (2019), object recognition refers to a collection of related tasks for identifying objects in digital photographs.

The first task is image classification, where a class is predicted for an object in an image.

The second task is called object localization, where the location of objects in an image is identified with a bounding box and a class label.

Finally, object detection combines these two tasks, localizing and classifying one or more objects in an image. When people use the term “Object Recognition”, they often mean object detection, which is one of the tasks within object recognition.
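The difference between these tasks is easiest to see in their outputs. In this sketch, which uses hypothetical types, classification returns only a class label, while a detection pairs each label with a bounding box and a confidence score. The sketch also adds intersection over union (IoU), a standard way to measure how well a predicted box overlaps a reference box. IoU is not mentioned by the source above and is included here only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Box:
    x1: float  # left
    y1: float  # top
    x2: float  # right
    y2: float  # bottom

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


@dataclass
class Detection:
    label: str    # what the object is (classification)
    box: Box      # where it is (localization)
    score: float  # model confidence


ClassificationResult = str         # image classification: a class, no location
DetectionResult = List[Detection]  # object detection: any number of labeled boxes


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes: 0 = no overlap, 1 = identical."""
    inter = Box(max(a.x1, b.x1), max(a.y1, b.y1), min(a.x2, b.x2), min(a.y2, b.y2))
    overlap = inter.area()
    union = a.area() + b.area() - overlap
    return overlap / union if union > 0 else 0.0


predicted = Detection("person", Box(10, 10, 50, 90), 0.92)
ground_truth = Box(12, 8, 52, 88)
print(round(iou(predicted.box, ground_truth), 3))
```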

Why use AI-Based Video Analytics?

AI-Based Video Analytics brings many benefits to enterprises including:

- Enhanced security and safety measures

- Optimizing operational efficiency

- Ensuring worker safety and health

- Incident investigation and analysis

Why Compute AI-Based Video Analytics at the Edge?

As embedded devices become more powerful, more data is processed where it is collected, such as on sensors and embedded systems. AI-Based Video Analytics is also run at the Edge instead of the Cloud, for reasons including:

- Reduced Latency. When AI-Based Video Analytics runs at the edge, responses arrive faster because the data does not need to travel to the cloud and back. This makes a drastic difference in situations where action must be taken in real time, such as security and surveillance, which are often the reason AI-Based Video Analytics is implemented in the first place.

- Increased Privacy and Security. Because the analysis happens where the data is collected rather than over the internet, where data may be intercepted, the system is less vulnerable. This is especially valuable when the footage contains personally identifiable information, such as facial recognition data.

- Bandwidth Efficiency. When computing happens at the edge, only the data that requires further processing is sent to the cloud. This frees up bandwidth and reduces data transfer costs and energy consumption.

- Offline Operation. AI-Based Video Analytics at the edge can run without an internet connection. This is particularly advantageous at remote locations where connectivity is intermittent or unavailable.

- Real-Time Decision Making. Edge computing lets systems independently make and act on mission-critical decisions that could have dire consequences if they had to wait for analysis in the cloud.

- Enhanced Scalability. When video is analyzed at the sensors themselves, the processing and analysis load is shared across many devices instead of being concentrated in one place. Running tasks in parallel across multiple edge devices distributes the workload efficiently and makes the system easier to scale.
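The bandwidth and scalability points can be sketched together. In this sketch, each edge device analyzes its camera streams locally and forwards to the cloud only the frames that contain relevant detections, while camera streams are spread round-robin across devices. The device and camera names, the set of “relevant” labels, and the round-robin policy are illustrative assumptions, not a description of any particular product.

```python
from itertools import cycle
from typing import Dict, List, Sequence, Set, Tuple

Frame = Tuple[int, List[str]]  # (frame number, labels detected locally on the edge device)


def frames_to_upload(frames: Sequence[Frame], relevant: Set[str]) -> List[int]:
    """Keep only frames whose local detections include a relevant label; the rest stay on-site."""
    return [num for num, labels in frames if relevant.intersection(labels)]


def assign_streams(cameras: Sequence[str], devices: Sequence[str]) -> Dict[str, List[str]]:
    """Spread camera streams across edge devices round-robin so no single box carries the whole load."""
    plan: Dict[str, List[str]] = {d: [] for d in devices}
    for camera, device in zip(cameras, cycle(devices)):
        plan[device].append(camera)
    return plan


frames = [(0, []), (1, ["person"]), (2, ["bird"]), (3, ["person", "forklift"])]
print(frames_to_upload(frames, {"person", "forklift"}))  # [1, 3]
print(assign_streams(["cam1", "cam2", "cam3"], ["edge-a", "edge-b"]))
# {'edge-a': ['cam1', 'cam3'], 'edge-b': ['cam2']}
```

In this toy run, only two of the four frames would leave the site. Adding an edge device just adds another key to the assignment plan.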

What do Industrial Computing Systems need to run AI Video?

Computers running AI-Based Video Analytics applications are often placed in harsh environments. These include remote sites with high levels of dust and airborne particles, unstable or moving ground near other moving objects, outdoor locations with fluctuating temperatures and drastic changes in climate, and even exposure to high humidity or sprays. These conditions put additional pressure on the systems, on top of the software-compatibility requirements and the sustained, demanding data processing that AI video involves.

Hardware Accelerators for AI-Based Video Analytics

Harsh conditions at the edge call for systems, such as fanless, ruggedized industrial computers, that can operate reliably 24/7 without interruption as they carry out mission-critical functions. There are also specific hardware requirements to consider for optimal performance and satisfactory results when using AI-Based Video Analytics in industrial applications.

Firstly, a powerful processor is essential for handling the complex computations involved in AI algorithms. Industrial computing systems that are used for AI-Based Video Analytics typically include GPUs (Graphics Processing Units) for advanced image processing capabilities.

Dedicated AI accelerators accelerate embedded deep learning applications on edge devices. These include ASICs (Application-Specific Integrated Circuits) such as Google’s Tensor Processing Unit (TPU) and HAILO processors. FPGAs (Field-Programmable Gate Arrays) are also commonly used for efficient parallel processing.

Next, ample memory, both RAM and storage, is needed to store and access large amounts of data efficiently. High-speed storage such as NVMe (Non-Volatile Memory Express) SSDs (Solid-State Drives) enables quick data retrieval.
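A rough calculation shows why ample RAM matters. This sketch assumes uncompressed 8-bit RGB frames (3 bytes per pixel). Under that assumption, buffering video takes width × height × 3 × frames per second × seconds × cameras bytes. The resolution, frame rate, buffer length, and camera count below are example values, not requirements of any particular system. Real pipelines often hold compressed or downscaled frames, and model weights and intermediate results need memory on top of this.

```python
def frame_buffer_bytes(width: int, height: int, fps: int, seconds: float, cameras: int,
                       bytes_per_pixel: int = 3) -> int:
    """Bytes needed to hold `seconds` of uncompressed video from each of `cameras` streams."""
    frame_bytes = width * height * bytes_per_pixel
    return int(frame_bytes * fps * seconds * cameras)


# Example values: four 1080p cameras at 30 fps, buffering 2 seconds each.
total = frame_buffer_bytes(1920, 1080, fps=30, seconds=2, cameras=4)
print(f"{total / 2**30:.2f} GiB")  # 1.39 GiB
```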

Lastly, compatibility with AI models and software frameworks ensures smooth integration and utilization of AI capabilities.

Ruggedized Industrial Computers for AI-Based Video Analytics

With a modular, stackable, two-piece brick design, the RCO-3000 and RCO-6000 Series are fanless, ruggedized industrial PCs with hot-swappable NVMe SSDs. They enable object detection and high-performance AI-Based Video Analytics while generating display output in real time. The design includes built-in security features and makes upgrades and repairs easy for operators, without the need to replace the entire computing system. The RCO Series is a competitive solution for machine vision tasks in harsh industrial environments.

Explore RCO-3000-CML Compact Fanless Computer

Explore RCO-6000-CML Edge AI Inference Computer